Poker Neural Network Example

By Matthew J. Simoneau, MathWorks, and Jane Price, MathWorks


Inspired by research into the functioning of the human brain, artificial neural networks are able to learn from experience. These powerful problem solvers are highly effective where traditional, formal analysis would be difficult or impossible. Their strength lies in their ability to make sense out of complex, noisy, or nonlinear data. Neural networks can provide robust solutions to problems in a wide range of disciplines, particularly areas involving classification, prediction, filtering, optimization, pattern recognition, and function approximation.

A look at a specific application using neural networks technology will illustrate how it can be applied to solve real-world problems. An interesting example can be found at the University of Saskatchewan, where researchers are using MATLAB and the Neural Network Toolbox to determine whether a popcorn kernel will pop.

Knowing that nothing is worse than a half-popped bag of popcorn, they set out to build a system that could recognize which kernels will pop when heated by looking at their physical characteristics. The neural network learns to recognize what differentiates a poppable from an unpoppable kernel by looking at 16 features, such as roughness, color, and size.

The goal is to design a neural network that maps a set of inputs (the 16 features extracted from a kernel) to the proper output, in this case 1 for popped and -1 for unpopped. The first step is to gather this data from hundreds of kernels. To do this, the researchers extract the characteristics of each kernel using a machine vision system, then heat the kernel to see if it pops. This data, when combined with the proper learning algorithm, will be used to teach the network to distinguish a good kernel from a bad one.

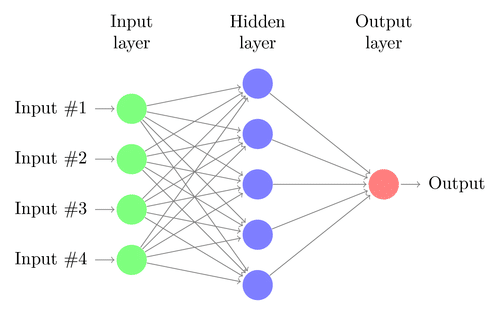

As the name suggests, a neural network is a collection of connected artificial neurons. Each artificial neuron is based on a simplified model of the neurons found in the human brain. The complexity of the task dictates the size and structure of the network. The popcorn problem requires a standard feed-forward network, an example of which is shown in Figure 1. The popcorn network itself needs 16 inputs, 15 neurons in the first hidden layer, 35 in the second, and 1 output neuron. Each neuron has a connection to each of the neurons in the previous layer, and each of these connections has a weight that determines the strength of the coupling.
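The layer sizes described above can be sketched in plain Python. This is only an illustration, not the researchers' MATLAB code: the `tanh` transfer function, the random weight range, and the helper names `init_layer` and `forward` are assumptions, and the feature values are made up.

```python
import math
import random

def init_layer(n_inputs, n_neurons, rng):
    """Build one fully connected layer as a list of (weights, bias) pairs."""
    return [([rng.uniform(-0.5, 0.5) for _ in range(n_inputs)],
             rng.uniform(-0.5, 0.5))
            for _ in range(n_neurons)]

def forward(layers, features):
    """Propagate one feature vector through each layer in turn."""
    activations = features
    for layer in layers:
        # Every neuron sees every activation from the previous layer.
        activations = [
            math.tanh(sum(w * a for w, a in zip(weights, activations)) + bias)
            for weights, bias in layer
        ]
    return activations

rng = random.Random(0)
sizes = [16, 15, 35, 1]  # inputs, two hidden layers, one output (from the article)
layers = [init_layer(n_in, n_out, rng) for n_in, n_out in zip(sizes, sizes[1:])]

kernel = [rng.random() for _ in range(16)]  # made-up feature values
output = forward(layers, kernel)[0]         # lies between -1 and 1 (tanh is assumed)

# Count the connection weights: 16*15 + 15*35 + 35*1
n_weights = sum(len(weights) for layer in layers for weights, _ in layer)
print(n_weights)  # 800
```

With untrained random weights the output is meaningless; training, described next, is what makes it track the popped/unpopped label.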

For this problem, the backpropagation algorithm guides the network's training. It holds the network's structure constant and modifies the weight associated with each connection. This is an iterative process that takes these initially random weights and adjusts them so the network will perform better after each pass through the data. Each set of features is presented to the neural network along with the corresponding desired output. The input signal propagates through the network and emerges at the output. The network's actual output is compared to the desired output to measure the network's performance. The algorithm then adjusts the weights to decrease this error. This training process continues until the network's performance can no longer improve.
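A minimal sketch of this loop, under stated assumptions, might look like the following. It uses one small hidden layer, `tanh` neurons, squared error, a fixed number of passes, and a tiny made-up data set with two features per sample instead of 16; none of these choices come from the article, and the function name `train` is hypothetical.

```python
import math
import random

def train(samples, n_hidden=3, lr=0.1, epochs=500, seed=0):
    """Train a 1-hidden-layer network with backpropagation; return error per pass."""
    rng = random.Random(seed)
    n_in = len(samples[0][0])
    # The structure stays fixed; only these weights change.
    # The last entry of each weight list is the bias.
    w_hidden = [[rng.uniform(-0.5, 0.5) for _ in range(n_in + 1)]
                for _ in range(n_hidden)]
    w_out = [rng.uniform(-0.5, 0.5) for _ in range(n_hidden + 1)]
    history = []
    for _ in range(epochs):
        total = 0.0
        for x, target in samples:
            # Forward pass: the input propagates through the network.
            h = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + row[-1])
                 for row in w_hidden]
            y = math.tanh(sum(w * hi for w, hi in zip(w_out, h)) + w_out[-1])
            # Compare the actual output with the desired output.
            err = y - target
            total += err * err
            # Backward pass: error signals for the output and hidden neurons.
            delta_out = err * (1 - y * y)
            delta_h = [delta_out * w_out[j] * (1 - h[j] ** 2)
                       for j in range(n_hidden)]
            # Adjust each weight in the direction that decreases the error.
            for j in range(n_hidden):
                w_out[j] -= lr * delta_out * h[j]
            w_out[-1] -= lr * delta_out
            for j, row in enumerate(w_hidden):
                for i in range(n_in):
                    row[i] -= lr * delta_h[j] * x[i]
                row[-1] -= lr * delta_h[j]
        history.append(total / len(samples))
    return history

# Made-up samples: (features, desired output), with 1 and -1 as in the article.
samples = [([0.9, 0.1], 1), ([0.8, 0.3], 1), ([0.7, 0.2], 1),
           ([0.1, 0.9], -1), ([0.2, 0.7], -1), ([0.3, 0.8], -1)]
history = train(samples)
print(f"mean squared error, first pass: {history[0]:.3f}, last pass: {history[-1]:.3f}")
```

A fixed pass count keeps the sketch short; the article's stopping rule, continuing until performance no longer improves, would replace the `epochs` limit with a check on `history`.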

The desired result is a neural network that is able to distinguish a poppable kernel from an unpoppable one. The key to the training is that the network doesn't just memorize specific kernels. Rather, it generalizes from the training sample and builds an internal model of which combinations of features determine “poppability.” The test, of course, is to give the network some data extracted from kernels it has never seen before and have it classify them. As illustrated in Figure 2, the network is correct three out of four times, providing the manufacturer with a method to significantly increase popcorn quality.
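Measuring performance on unseen kernels amounts to counting correct classifications. The sketch below assumes the network output is thresholded at zero to give the 1 and -1 labels; the outputs and labels are invented for illustration and are not the researchers' results, and the names `classify` and `accuracy` are hypothetical.

```python
def classify(output):
    """Map a raw network output to a label: 1 = popped, -1 = unpopped."""
    return 1 if output >= 0 else -1

def accuracy(outputs, labels):
    """Fraction of held-out samples whose predicted label matches the true one."""
    correct = sum(1 for out, label in zip(outputs, labels)
                  if classify(out) == label)
    return correct / len(labels)

# Hypothetical network outputs for eight kernels never used in training,
# paired with what actually happened when each kernel was heated.
test_outputs = [0.8, -0.6, 0.3, -0.2, 0.7, 0.1, -0.9, 0.4]
test_labels = [1, -1, 1, 1, 1, -1, -1, 1]

print(accuracy(test_outputs, test_labels))  # 0.75
```

Here six of the eight made-up kernels are classified correctly, which matches the three-out-of-four rate quoted above.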

Neural network technology has been proven to excel in solving a variety of complex problems in engineering, science, finance, and market analysis. Examples of the practical applications of this technology are widespread. For example, NOW! Software uses the Neural Network Toolbox to predict prices in futures markets for the financial community. The model is able to generate highly accurate next-day price predictions. Meanwhile, researchers at Scientific Monitoring, Inc., are using MATLAB and the Neural Network Toolbox to apply a neural network-based sensor validation system to a simulation of a turbofan engine. Their ultimate goal is to improve the time-limited dispatch of an aircraft by deferring engine sensor maintenance without a loss in operational safety or performance.

The latest release of the Neural Network Toolbox offers several new features, including new network types, learning and training algorithms, improved network performance, easier customization, and increased design flexibility.

- New modular network representation: all network properties can be easily customized and are collected in a single network object

- New reduced-memory Levenberg-Marquardt algorithm for handling very large problems

- New supervised networks
  - Generalized Regression
  - Probabilistic

- New network training algorithms
  - Resilient Backpropagation (Rprop)
  - Conjugate Gradient
  - Two Quasi-Newton methods

- Flexible and easy-to-customize network performance, initialization, learning, and training functions

- Automatic creation of network simulation blocks for use with Simulink

- New training options
  - Automatic regularization
  - Training with validation
  - Early stopping

- New pre- and post-processing functions
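To illustrate the general idea behind training with validation and early stopping, here is a small Python sketch. It is not the Toolbox's API: the function name `early_stopping_epoch`, the `patience` parameter, and the error values are all assumptions chosen for illustration.

```python
def early_stopping_epoch(validation_errors, patience=3):
    """Return the index of the pass whose weights would be kept.

    Training stops once the validation error has failed to improve
    for `patience` consecutive passes.
    """
    best_epoch, best_error = 0, float("inf")
    for epoch, error in enumerate(validation_errors):
        if error < best_error:
            best_epoch, best_error = epoch, error
        elif epoch - best_epoch >= patience:
            break  # no improvement for `patience` passes: stop training
    return best_epoch

# Made-up validation errors: they fall at first, then rise as the
# network starts to memorize the training set.
validation_errors = [0.90, 0.60, 0.45, 0.40, 0.42, 0.41, 0.47, 0.55]
print(early_stopping_epoch(validation_errors))  # 3
```

The validation set is held apart from the data used to adjust the weights, so a rising validation error signals that the network has stopped generalizing.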

Published 1998


Editor’s note: One of the central technologies of artificial intelligence is neural networks. In this interview, Tam Nguyen, a professor of computer science at the University of Dayton, explains how neural networks, programs in which a series of algorithms try to simulate the human brain, work.

What are some examples of neural networks that are familiar to most people?

There are many applications of neural networks. One common example is your smartphone camera’s ability to recognize faces.

Driverless cars are equipped with multiple cameras which try to recognize other vehicles, traffic signs and pedestrians by using neural networks, and turn or adjust their speed accordingly.

Neural networks are also behind the text suggestions you see while writing texts or emails, and even the translation tools available online.

Does the network need to have prior knowledge of something to be able to classify or recognize it?

Yes, that’s why there is a need to use big data in training neural networks. They work because they are trained on vast amounts of data to then recognize, classify and predict things.

In the driverless car example, the network would need to look at millions of images and videos of all the things on the street and be told what each of those things is. When you click on images of crosswalks to prove that you’re not a robot while browsing the internet, your answers can also be used to help train a neural network. Only after seeing millions of crosswalks, from all different angles and lighting conditions, would a self-driving car be able to recognize them when it’s driving around in real life.

More complicated neural networks are actually able to teach themselves. In one video demonstration, a network is given the task of going from point A to point B, and you can see it trying all sorts of things to get the model to the end of the course until it finds the one that does the best job.

Some neural networks can work together to create something new. In one online example, the networks create a virtual face that doesn’t belong to a real person each time you refresh the page. One network makes an attempt at creating a face, and the other tries to judge whether it is real or fake. They go back and forth until the second one cannot tell that the face created by the first is fake.

Humans take advantage of big data too. A person perceives around 30 frames or images per second, which means 1,800 images per minute, and over 600 million images per year. That is why we should give neural networks a similar opportunity to have the big data for training.
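The figures above can be checked with a few lines of arithmetic. The 30 frames per second comes from the interview; the 16 waking hours per day is an assumption added here because it is one way to arrive at a yearly total just over 600 million.

```python
FRAMES_PER_SECOND = 30     # figure quoted in the interview
WAKING_HOURS_PER_DAY = 16  # assumption, not stated in the interview

per_minute = FRAMES_PER_SECOND * 60
per_year_awake = FRAMES_PER_SECOND * 3600 * WAKING_HOURS_PER_DAY * 365
per_year_all_day = FRAMES_PER_SECOND * 3600 * 24 * 365

print(per_minute)        # 1800
print(per_year_awake)    # 630720000
print(per_year_all_day)  # 946080000
```

Counting all 24 hours would give roughly 946 million images per year, so the interview's "over 600 million" holds under either reading.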

How does a basic neural network work?

A neural network is a network of artificial neurons programmed in software. It tries to simulate the human brain, so it has many layers of “neurons” just like the neurons in our brain. The first layer of neurons will receive inputs like images, video, sound, text, etc. This input data goes through all the layers, as the output of one layer is fed into the next layer.

Let’s take an example of a neural network that is trained to recognize dogs and cats. The first layer of neurons will break up this image into areas of light and dark. This data will be fed into the next layer to recognize edges. The next layer would then try to recognize the shapes formed by the combination of edges. The data would go through several layers in a similar fashion to finally recognize whether the image you showed it is a dog or a cat according to the data it’s been trained on.

These networks can be incredibly complex, using millions of parameters to classify and recognize the input they receive.

Why are we seeing so many applications of neural networks now?

Actually neural networks were invented a long time ago, in 1943, when Warren McCulloch and Walter Pitts created a computational model for neural networks based on algorithms. Then the idea went through a long hibernation because the immense computational resources needed to build neural networks did not exist yet.

Recently, the idea has come back in a big way, thanks to advanced computational resources like graphics processing units (GPUs). These chips were originally used for processing graphics in video games, but it turns out that they are also excellent at crunching the data required to run neural networks. That is why we now see the proliferation of neural networks.
